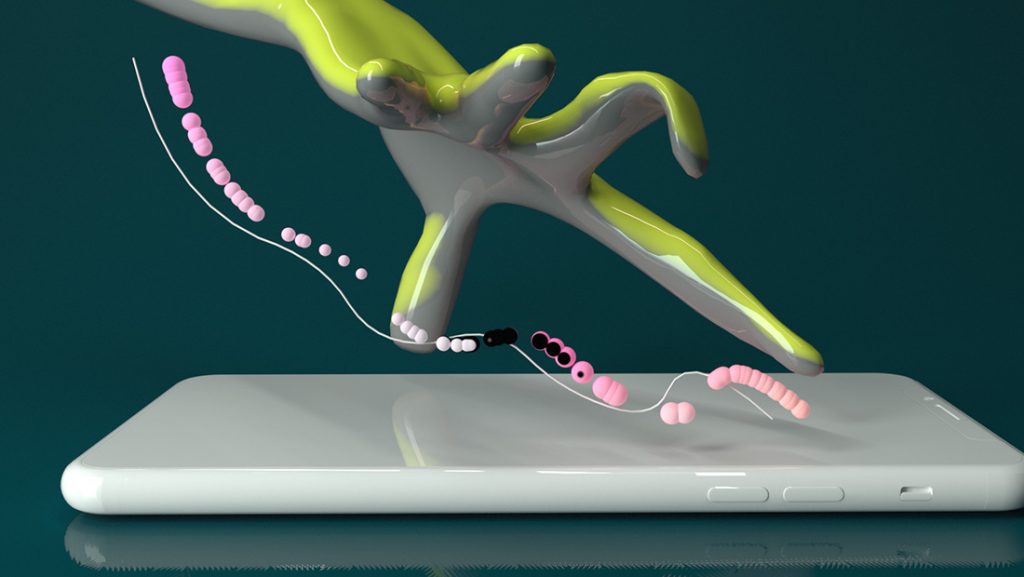

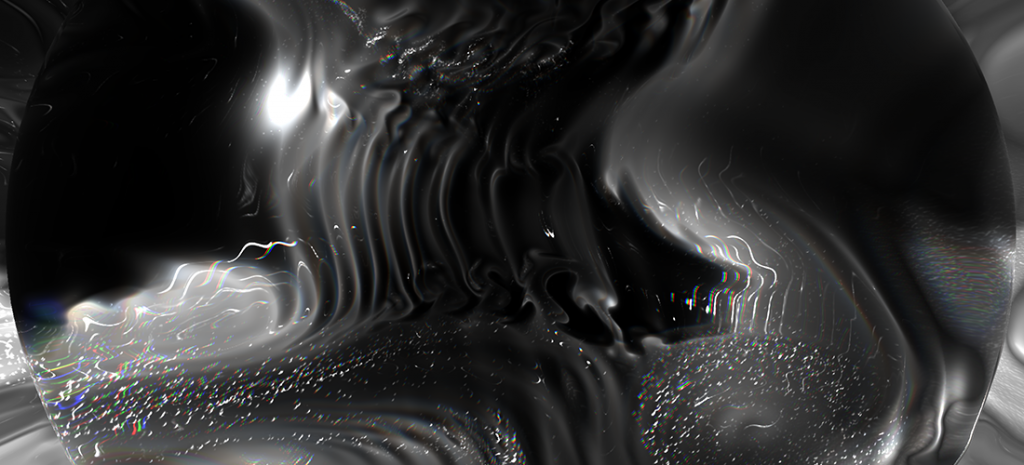

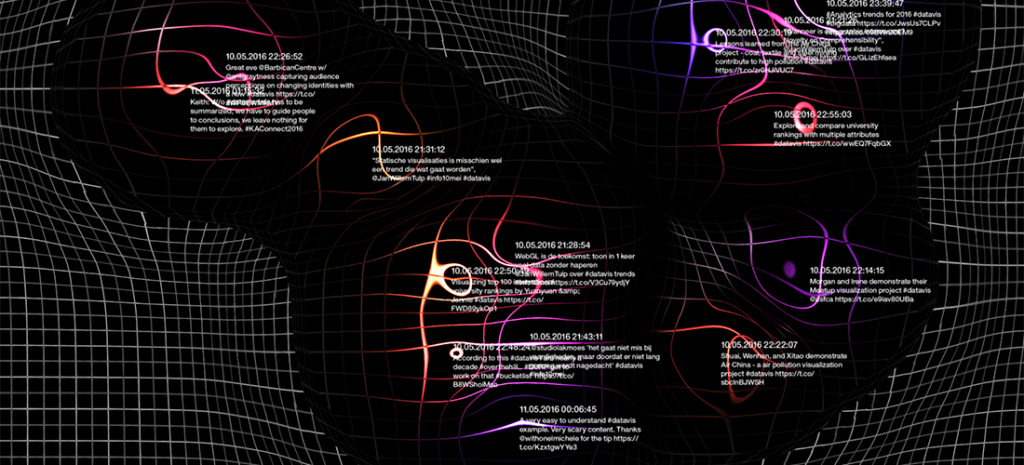

Soap and Milk is an interactive data experience that lets the observer perceive social media as an overwhelming, organic figment. Each microscopic droplet represents a tweet that refers to the installation. Once a droplet has spawned, the viewer is invited to interact with it physically and explore its behaviour until it vanishes into the vivid setting.

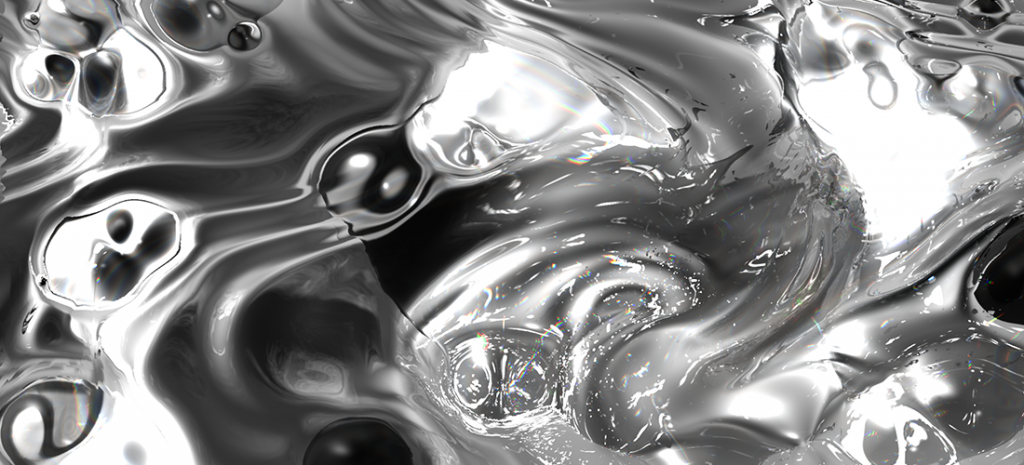

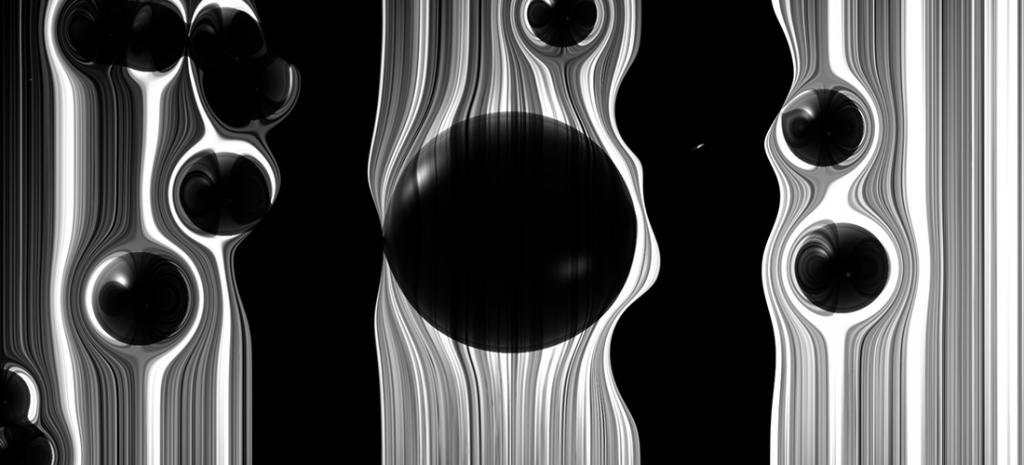

Soap and Milk is a visual experience that portrays information from social media as an organic landscape of fluids. We envisioned droplets spawning on surreal liquid surfaces, melting and interacting with each other until they fade into the depths of their microcosm. Tweets referring to a predefined hashtag seem to come alive as bubbles that bounce around, playfully simplified and yet unpredictable in their behaviour.
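The droplet lifecycle described above (spawn per matching tweet, bounce, fade out) can be sketched as a tiny particle system. This is a minimal CPU sketch under stated assumptions, not the installation's code: the hashtag value `#soapandmilk`, the 10-second `LIFESPAN`, the unit-square world and the linear fade are all hypothetical choices for illustration.

```python
import random
from dataclasses import dataclass

HASHTAG = "#soapandmilk"  # hypothetical: the real hashtag is not given
LIFESPAN = 10.0           # hypothetical lifetime of a droplet, in seconds


@dataclass
class Droplet:
    text: str
    x: float
    y: float
    vx: float
    vy: float
    age: float = 0.0

    @property
    def alpha(self) -> float:
        # Opacity fades linearly from 1 to 0 over the droplet's lifespan.
        return max(0.0, 1.0 - self.age / LIFESPAN)


def spawn(tweets, droplets, rng):
    """Create one droplet at a random position for every tweet containing the hashtag."""
    for text in tweets:
        if HASHTAG in text.lower():
            droplets.append(Droplet(text, rng.random(), rng.random(),
                                    rng.uniform(-0.1, 0.1), rng.uniform(-0.1, 0.1)))


def step(droplets, dt):
    """Advance droplets by dt seconds, bounce them off the unit square, drop faded ones."""
    for d in droplets:
        d.age += dt
        d.x += d.vx * dt
        d.y += d.vy * dt
        if not 0.0 <= d.x <= 1.0:
            d.vx = -d.vx
            d.x = min(max(d.x, 0.0), 1.0)
        if not 0.0 <= d.y <= 1.0:
            d.vy = -d.vy
            d.y = min(max(d.y, 0.0), 1.0)
    droplets[:] = [d for d in droplets if d.alpha > 0.0]


if __name__ == "__main__":
    rng = random.Random(42)
    droplets = []
    spawn(["Bubbles! #SoapAndMilk", "unrelated tweet"], droplets, rng)
    step(droplets, 5.0)
    print(len(droplets), droplets[0].alpha)  # 1 0.5
    step(droplets, 5.0)
    print(len(droplets))  # 0
```

In the installation, the "bounce" would come from fluid and collision effects on the GPU rather than from simple wall reflections; the sketch only shows the spawn/age/fade bookkeeping.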

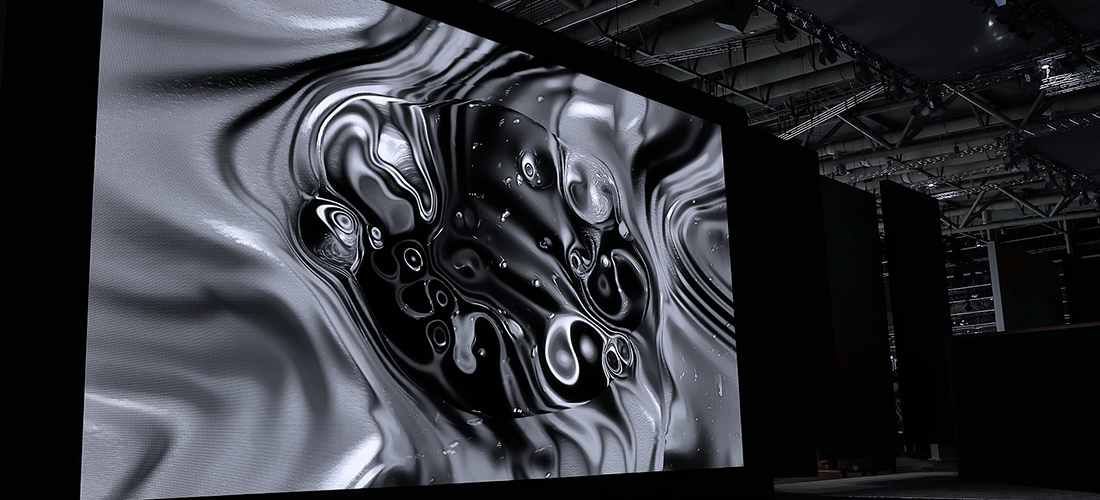

To let the observer witness the lifespan of this data, we wanted them to be able to touch and manipulate its visual representation. The installation is designed as a visual oxymoron, poised between a larger-than-life experience and a microscopic view of interacting droplets. By showing the interactions of tiny bubbles on a giant LED screen, we wanted viewers to feel as if they had shrunk into the fluids, opening up new perspectives on, and new ways of interacting with, complex and detailed processes.

The visuals of Soap and Milk consist of more than forty layers of different rendering effects and GPU-based calculations. Techniques such as fluid simulations, compute shaders, raymarching, ambient occlusion, texture effects and post-processing combine into a surreal look and feel of detailed, fragile impressions. No polygons were used to implement the graphics. Every visual layer is a fragment shader, which let us calculate shapes, shadows and reflections accurately for each pixel and hence create the illusion of endless detail. The efficiency of vvvv's DX11 rendering pipeline enabled us to quickly investigate, experiment with and retry different visual scenarios and concepts.
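To illustrate how shapes can be rendered per pixel without polygons, here is a minimal CPU sketch of raymarching (sphere tracing) against a signed distance field. It is not the project's HLSL: the two-droplet scene, the polynomial smooth minimum used to "melt" droplets together, the camera and the light direction are all assumptions chosen for illustration. `shade(u, v)` plays the role of a fragment shader evaluated once per pixel.

```python
import math

# Hypothetical scene: two droplets (centre, radius) that melt into each other.
DROPLETS = [((-0.4, 0.0, 0.0), 0.5), ((0.4, 0.0, 0.0), 0.5)]
BLEND = 0.3  # smoothing radius of the blend, an arbitrary choice


def sd_sphere(p, centre, radius):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, centre) - radius


def smooth_min(a, b, k):
    """Polynomial smooth minimum: like min(a, b) but rounds off the seam within k."""
    h = max(k - abs(a - b), 0.0) / k
    return min(a, b) - h * h * k * 0.25


def scene(p):
    d = math.inf
    for centre, radius in DROPLETS:
        d = smooth_min(d, sd_sphere(p, centre, radius), BLEND)
    return d


def march(origin, direction, max_steps=128, eps=1e-4, max_dist=20.0):
    """Sphere tracing: step along the ray by the distance bound until the surface is hit."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = scene(p)
        if dist < eps:
            return t
        t += dist
        if t > max_dist:
            break
    return None  # the ray missed every droplet


def normal(p, h=1e-4):
    """Surface normal from central differences of the distance field."""
    grad = []
    for axis in range(3):
        offset = [0.0, 0.0, 0.0]
        offset[axis] = h
        plus = tuple(a + b for a, b in zip(p, offset))
        minus = tuple(a - b for a, b in zip(p, offset))
        grad.append(scene(plus) - scene(minus))
    length = math.sqrt(sum(g * g for g in grad))
    return tuple(g / length for g in grad)


def shade(u, v):
    """What a fragment shader would compute for the pixel at screen coordinates (u, v)."""
    origin = (0.0, 0.0, -3.0)
    length = math.sqrt(u * u + v * v + 1.5 * 1.5)
    direction = (u / length, v / length, 1.5 / length)
    t = march(origin, direction)
    if t is None:
        return 0.0
    p = tuple(o + t * d for o, d in zip(origin, direction))
    n = normal(p)
    light = (-0.577, 0.577, -0.577)  # roughly normalised light direction
    return max(0.0, sum(a * b for a, b in zip(n, light)))


if __name__ == "__main__":
    # Crude ASCII "framebuffer": one shade() call per character.
    for row in range(12):
        v = 1.0 - row / 5.5
        print("".join(" .:-=+*#%@"[min(9, int(shade(-1.5 + col / 10.0, v) * 9.99))]
                      for col in range(31)))
```

Because every pixel is computed independently from the distance field, the same logic maps directly onto a fragment shader; effects such as ambient occlusion and soft shadows can be derived from further queries of `scene()`.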

We used an industrial infrared camera by XIMEA to capture the users' movements. Our system captures 2K video at 170 frames per second, so our algorithm never loses track of a moving body or its motion. To process this data, we wrote a custom GPU-based optical flow solution that analyses and compares 2 million pixels in under 5 milliseconds. In our research, we found that high frame rate and resolution are more than just a boost in quality. Basic computer vision for interactive systems is often a matter of visual debugging: the coder perceives a hand or a body within a camera image and transfers that perception to the algorithm. As a result, the implementation is limited by the engineer's perception. At 170 fps, however, our solution reconstructs more than the human eye is able to see. The resulting data reveals even the tiniest and fastest motions, allowing users to feel their own dynamics immediately.
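As a quick budget check: at 170 fps a new frame arrives every 1000 / 170 ≈ 5.9 ms, so analysing a frame in under 5 ms lets the optical flow keep pace with the camera. The custom GPU solution itself is not described here, so the sketch below swaps in the classic Lucas–Kanade method, evaluated at a single pixel in plain Python. The function names, the window radius `r=2` and the synthetic test frames are hypothetical. Each `(x, y)` evaluation is independent of the others, which is what makes this kind of method map naturally to one GPU thread per pixel.

```python
import math


def lucas_kanade(prev, curr, x, y, r=2):
    """Estimate motion (u, v) in pixels/frame at pixel (x, y) from a (2r+1)^2 window.

    prev and curr are greyscale frames given as lists of rows (curr[y][x]).
    Solves the 2x2 least-squares system
        [sxx sxy] [u]     [sxt]
        [sxy syy] [v] = - [syt]
    built from spatial gradients (ix, iy) and the temporal difference it.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for j in range(y - r, y + r + 1):
        for i in range(x - r, x + r + 1):
            ix = (curr[j][i + 1] - curr[j][i - 1]) * 0.5  # central difference in x
            iy = (curr[j + 1][i] - curr[j - 1][i]) * 0.5  # central difference in y
            it = curr[j][i] - prev[j][i]                  # change between frames
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return 0.0, 0.0  # flat or edge-only window: motion is ambiguous (aperture problem)
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


def make_frame(width, height, shift_x=0.0):
    """Synthetic textured frame, optionally shifted right by shift_x pixels."""
    return [[math.sin(0.3 * (i - shift_x)) + math.sin(0.25 * j) for i in range(width)]
            for j in range(height)]


if __name__ == "__main__":
    prev = make_frame(24, 16)
    curr = make_frame(24, 16, shift_x=0.5)
    u, v = lucas_kanade(prev, curr, 11, 6)
    print(f"u = {u:.3f}, v = {v:.3f}")  # close to u = 0.5, v = 0.0
```

The linearisation behind Lucas–Kanade only holds for small displacements between frames, which is one concrete reason a high frame rate helps: at 170 fps, even fast gestures move only a few pixels per frame.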

The whole system runs on one computer equipped with a powerful processor and an NVIDIA Titan X graphics card. Each camera image is uploaded to the GPU as soon as a new frame is detected. From this point on, all tasks, from image analysis to real-time rendering, are performed on the GPU only, to avoid data-processing bottlenecks and latency. The motion detection is done in openFrameworks and the rendering passes in vvvv; the two applications communicate via Spout.
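Spout shares textures between applications on the GPU, so frames do not need to be copied back to the CPU. The Python class below is only a CPU-side model of the hand-off behaviour assumed here: the receiver always reads whichever frame was written last, so a slower reader skips frames instead of building up a backlog. It does not use or imitate Spout's API; `LatestFrame` and the producer function are hypothetical names.

```python
import threading


class LatestFrame:
    """Single-slot hand-off: the writer overwrites, the reader always sees the newest frame.

    Nothing queues up, so a slow reader skips frames instead of falling behind.
    """

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None
        self._seq = 0  # number of frames published so far

    def publish(self, frame):
        with self._lock:
            self._frame = frame
            self._seq += 1

    def latest(self):
        with self._lock:
            return self._seq, self._frame


if __name__ == "__main__":
    slot = LatestFrame()

    def motion_detection():
        # Hypothetical producer standing in for the optical-flow process.
        for n in range(1000):
            slot.publish(f"flow field {n}")

    producer = threading.Thread(target=motion_detection)
    producer.start()
    producer.join()
    print(slot.latest())  # (1000, 'flow field 999')
```

A renderer polling `latest()` once per display frame would compare the sequence number with the last one it used to tell whether new motion data has arrived, and it would never process stale frames from a queue.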